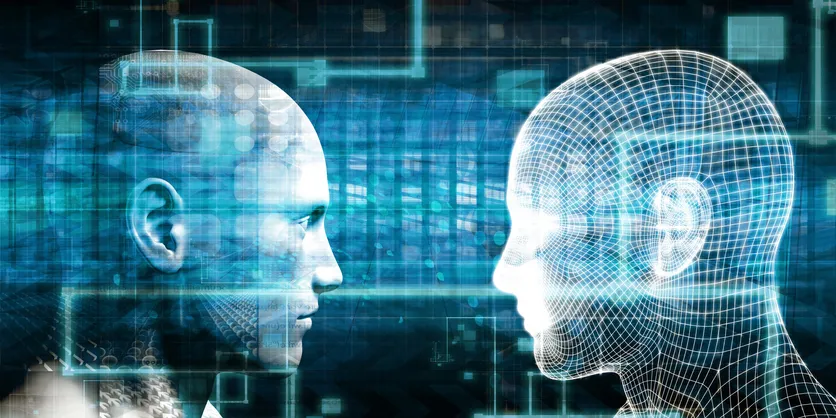

AI Relationship Mediator

An AI Relationship Mediator is an intelligent system designed to assist individuals or couples in resolving interpersonal conflicts, improving communication, and fostering emotional understanding. Unlike a chatbot that simply gives advice, a true AI mediator would function as a neutral facilitator — analyzing emotional signals, communication patterns, and linguistic context to guide people toward mutual understanding and constructive dialogue. In essence, it serves as a digital counselor that helps humans navigate emotional complexity through structured, data-informed insight.

Core Principles

The mediator operates on three key pillars:

- Emotional Intelligence (EI): The AI must detect, interpret, and respond appropriately to human emotions. This requires natural language processing (NLP) models tuned not only for semantics but also for tone, sentiment, and empathetic response.

- Neutrality and Fairness: Mediation depends on impartiality. The system's algorithms must be designed so that they do not favor either participant on the basis of gender, culture, or communication style.

- Confidentiality and Privacy: Conversations often contain sensitive personal data, which calls for strong encryption, local (on-device) data processing where possible, and compliance with privacy regulations such as the EU's General Data Protection Regulation (GDPR).
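One way to support the confidentiality pillar is to pseudonymize transcripts before any analysis runs. The sketch below assumes participant names are known in advance and uses a keyed hash (HMAC-SHA256 from Python's standard library) so the same person always maps to the same token; the function name `pseudonymize` and the `P_` token format are illustrative assumptions. Pseudonymized data still counts as personal data under GDPR, so this complements encryption rather than replacing it.

```python
import hashlib
import hmac
import re
from typing import List


def pseudonymize(transcript: str, names: List[str], key: bytes) -> str:
    """Replace each participant name with a stable keyed pseudonym.

    HMAC-SHA256 with a secret key maps the same name to the same token
    every time, so patterns can still be compared across sessions, while
    the token cannot be linked back to the name without the key.
    """
    result = transcript
    for name in names:
        digest = hmac.new(key, name.lower().encode("utf-8"), hashlib.sha256).hexdigest()
        token = f"P_{digest[:8]}"  # hypothetical token format
        # Word boundaries avoid rewriting substrings of other words;
        # re.escape guards against names containing regex metacharacters.
        pattern = re.compile(rf"\b{re.escape(name)}\b", re.IGNORECASE)
        result = pattern.sub(token, result)
    return result
```

For example, `pseudonymize("Alex said Sam never listens.", ["Alex", "Sam"], key)` replaces both names with opaque `P_…` tokens, and a later session processed with the same key yields the same tokens.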

How It Works

An AI Relationship Mediator could analyze text, voice, or video input between partners or participants. Here’s how a typical mediation session might unfold:

- Input and Context Capture: Participants describe the issue or upload a conversation transcript. The AI identifies emotional tone, key topics, and communication breakdowns, for example patterns of blame, avoidance, or misinterpretation.

- Emotional and Linguistic Analysis: Advanced sentiment and affective-computing algorithms recognize frustration, sadness, defensiveness, or affection hidden in phrasing. For instance, it might detect that one person’s “You never listen to me” is an unmet-need statement rather than a personal attack.

- Framing and Rephrasing Suggestions: The AI suggests rewordings to make communication more constructive, e.g. turning “You’re always late” into “I feel anxious when plans change unexpectedly.”

- Insight Generation: It identifies recurring patterns (“In your last three discussions, both of you shifted topics when emotions rose”) and provides actionable insights grounded in communication theory or CBT (Cognitive Behavioral Therapy).

- Resolution Modeling: Based on past successful cases and psychological research, the AI proposes resolution frameworks, from mutual goal setting to guided empathy exercises.

- Follow-Up and Reflection: The system can track progress over time, noting improvements in tone or mutual understanding, while keeping all data encrypted and anonymized.
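The analysis and rephrasing steps can be sketched with a deliberately simple rule-based pass. This is a hypothetical illustration, not how a trained affective-computing model works: the `ABSOLUTES` lexicon, the `analyze` function, and the I-statement template are all assumptions. Each flag carries a plain-language reason, so the user can see why a suggestion was made.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical toy lexicon; a production mediator would rely on trained
# sentiment/affect models rather than keyword matching.
ABSOLUTES = {"always", "never", "constantly", "nothing", "everything"}


@dataclass
class Analysis:
    utterance: str
    flags: List[str] = field(default_factory=list)  # human-readable reasons
    suggestion: Optional[str] = None


def analyze(utterance: str, feeling: str = "hurt") -> Analysis:
    """Flag blame-framed or absolutist phrasing and propose an I-statement template."""
    text = utterance.lower()
    result = Analysis(utterance)
    words = set(re.findall(r"[a-z']+", text))

    absolutes = sorted(words & ABSOLUTES)
    if absolutes:
        result.flags.append("absolute language: " + ", ".join(absolutes))
    # Sentences opening with "you" are often heard as accusations.
    if re.match(r"\s*you\b", text):
        result.flags.append("starts with 'you' (may be heard as blame)")

    if result.flags:
        # Template only: the speaker fills in the situation and the request.
        result.suggestion = (
            f"I feel {feeling} when [specific situation]. "
            "What I need is [request]."
        )
    return result
```

Calling `analyze("You’re always late")` with a straight apostrophe returns two flags (the absolute "always" and the "you" opener) plus the template, while an utterance that is already an I-statement comes back with no flags and no suggestion.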

Technological Foundations

Such a mediator relies on multiple AI subfields:

- Natural Language Understanding: To interpret emotional nuance, ambiguity, sarcasm, and contextual meaning.

- Affective Computing: To infer emotion from voice tone, facial expressions, or word choice.

- Psycholinguistic Models: To analyze how language reflects cognitive and emotional states.

- Explainable AI (XAI): To ensure that the AI’s suggestions and conclusions are transparent, avoiding the “black box” problem in emotionally sensitive contexts.

- Federated Learning: To train models on diverse relationship data while preserving individual privacy.
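Federated learning is commonly implemented with federated averaging (FedAvg): each device trains on its own conversations and sends only model parameters, which a server averages, weighting each client by the size of its local dataset. The minimal sketch below assumes parameters arrive as plain lists of floats keyed by layer name; real systems use tensor libraries and usually add secure aggregation or differential privacy, because raw parameter updates can still leak information.

```python
from typing import Dict, List, Tuple

Weights = Dict[str, List[float]]


def federated_average(client_updates: List[Tuple[Weights, int]]) -> Weights:
    """FedAvg-style aggregation.

    Each entry is (client_weights, num_local_examples). Only parameters,
    never raw conversations, reach the server.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    first, _ = client_updates[0]
    averaged: Weights = {name: [0.0] * len(vals) for name, vals in first.items()}
    for weights, n in client_updates:
        share = n / total  # clients with more data get proportionally more say
        for name, vals in weights.items():
            for i, v in enumerate(vals):
                averaged[name][i] += share * v
    return averaged
```

With one client holding 1 example and weights `[1.0, 2.0]` and another holding 3 examples and weights `[3.0, 4.0]`, the aggregate is `[2.5, 3.5]`, pulled toward the larger client.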

Ethical and Legal Dimensions

The ethical design of an AI relationship mediator is as important as its technical sophistication. Key issues include:

- Privacy and Consent: Since mediation often involves personal data, strict consent and encryption protocols are essential.

- Non-Maleficence: The AI must never exacerbate conflict or offer harmful psychological advice. It should escalate cases to human professionals when signs of abuse or severe distress appear.

- Bias and Fairness: Relationships are shaped by culture, gender norms, and power dynamics. An AI trained predominantly on Western data, for example, might misinterpret communication patterns from other cultures.

- Legal Status and Liability: If an AI provides relationship advice that leads to harm or miscommunication, who bears responsibility — the developer, the user, or the platform? Regulatory frameworks will need to define this.
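For the bias concern, a basic audit compares how often the system flags messages as hostile across cultural or linguistic groups (a demographic-parity check). The sketch assumes a labeled evaluation set of `(group, flagged)` pairs; the group labels and the "hostile" flag are hypothetical. A large gap is a signal to investigate rather than proof of bias, since genuine base rates can differ between groups.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def flag_rate_by_group(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of utterances flagged as hostile, per group label."""
    counts = defaultdict(lambda: [0, 0])  # group -> [flagged, total]
    for group, flagged in records:
        counts[group][0] += int(flagged)
        counts[group][1] += 1
    return {g: f / t for g, (f, t) in counts.items()}


def parity_gap(rates: Dict[str, float]) -> float:
    """Largest difference in flag rate between any two groups (0.0 means parity)."""
    return max(rates.values()) - min(rates.values())
```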

Potential Benefits

- Provides nonjudgmental support for couples reluctant to seek therapy.

- Offers on-demand mediation — available anytime, anywhere.

- Helps individuals improve communication skills by making emotional dynamics visible.

- Serves as a training aid for therapists or counselors to analyze patterns in anonymized form.

- Enables early conflict detection, potentially preventing relationship breakdowns.

Limitations and Risks

An AI cannot yet replicate the full empathy, intuition, and moral sensitivity of a human mediator. Emotional understanding depends on more than words — it includes subtle physical and historical contexts. There is also the danger of emotional dependency, where users replace genuine human dialogue with mediated AI interaction.

Moreover, if a relationship involves abuse, coercion, or manipulation, the AI must not act as a neutral middle ground — it should identify red flags and direct the victim to human help.
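The escalation rule can be expressed as a gate that runs before any mediation logic. In this sketch the regular expressions in `RED_FLAGS` are illustrative placeholders only; a real screen would need clinically validated criteria and human oversight.

```python
import re
from enum import Enum


class Route(Enum):
    CONTINUE_MEDIATION = "continue"
    ESCALATE_TO_HUMAN = "escalate"


# Hypothetical red-flag patterns for illustration only.
RED_FLAGS = [
    r"\b(hit|hits|choke[sd]?)\s+me\b",
    r"\bafraid of (him|her|them)\b",
    r"\b(won't|doesn't) let me (leave|see|talk)\b",
    r"\bthreaten(s|ed)? to (hurt|kill)\b",
]


def triage(message: str) -> Route:
    """Stop neutral mediation and hand off to a human when any red flag appears."""
    text = message.lower()
    if any(re.search(p, text) for p in RED_FLAGS):
        return Route.ESCALATE_TO_HUMAN
    return Route.CONTINUE_MEDIATION
```

The gate deliberately errs toward false positives (a phrase like "it hit me that…" would also trigger it), because routing a benign message to a human costs far less than keeping a person at risk inside a neutral mediation flow.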

Unique Future Directions

- Integration with Wearables: Physiological signals from wearables, such as heart rate or body temperature, combined with vocal tone, could give the AI deeper insight into stress and arousal patterns.

- Cultural Adaptation Engines: The AI could learn local communication norms, humor styles, and emotional expressiveness levels to better interpret users’ intentions.

- Neurosymbolic Mediation Models: Combining symbolic reasoning (moral principles, empathy frameworks) with neural networks for both understanding and ethical grounding.

- Collective Relationship Mediation: Expanding from romantic relationships to family, team, or community conflict resolution.
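As a concrete instance of the wearables idea, heart-rate variability is often summarized by RMSSD, the root mean square of successive differences between beat-to-beat (RR) intervals. The sketch assumes the wearable exposes RR intervals in milliseconds. How a mediator would interpret the value is an open design question, though comparing against each user's own baseline seems more sensible than a fixed threshold, since HRV also varies with age, fitness, and posture.

```python
import math
from typing import Sequence


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between heartbeats, in ms.

    RMSSD is a standard time-domain heart-rate-variability metric; lower
    values are often associated with higher physiological stress.
    """
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))
```

For RR intervals `[800, 810, 790, 800]` the successive differences are 10, -20, and 10 ms, giving RMSSD = √200 ≈ 14.1 ms.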

Philosophical Dimension

The concept of an AI mediator forces a profound question: Can empathy be computed? If an AI can detect pain, frustration, and affection with precision, is it truly understanding — or merely simulating understanding? The answer affects not only relationship counseling but the entire field of human–AI interaction.
